How to transfer data between Kubernetes Persistent Volumes (quick and dirty hack)

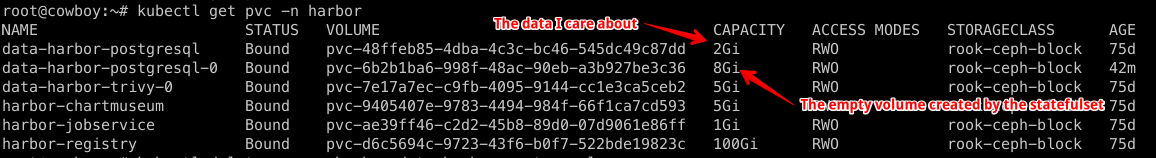

I've recently been using Flux v2's Helm controller to deploy applications into multiple clusters. In one instance I was replacing a manually created 2Gi PVC for a PostgreSQL database with a new one created by a StatefulSet deployed by Helm. The original PVC had been created outside the Helm chart and referenced from values.yaml via `existingClaim`. As a result it didn't have the name the StatefulSet expected, and it wasn't "adopted" by the Helm release when I upgraded it (see timestamps below).
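For context, a StatefulSet names each claim it creates from a `volumeClaimTemplates` entry as `<template name>-<statefulset name>-<ordinal>`. That is why a hand-made claim called `data-harbor-postgresql` is never picked up. The helper below is a minimal sketch of that naming rule. `sts_pvc_name` is a hypothetical helper, not part of any tool. The template name `data` is an assumption, inferred from the `data-harbor-trivy-0` claim in the listing further down.

```shell
#!/bin/sh
# Hypothetical helper: the PVC name a StatefulSet derives from one of its
# volumeClaimTemplates is <template>-<statefulset>-<ordinal>.
sts_pvc_name() {
  template=$1     # volumeClaimTemplates[].metadata.name, assumed "data" here
  statefulset=$2  # StatefulSet name, e.g. harbor-postgresql
  ordinal=$3      # replica index, 0 for the first pod
  printf '%s-%s-%s\n' "$template" "$statefulset" "$ordinal"
}

# Prints data-harbor-postgresql-0, the claim the chart's StatefulSet expects.
sts_pvc_name data harbor-postgresql 0
```

To check the real template name on a live StatefulSet, `kubectl get statefulset harbor-postgresql -n harbor -o jsonpath='{.spec.volumeClaimTemplates[*].metadata.name}'` should print it.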

I didn't want to wipe the database and start from scratch, though, since that would have meant hours of rework, so I came up with this quick-and-dirty hack:

First, I deleted all the deployments and statefulsets in the namespace, leaving the PVCs in place (but no longer in use by any pod):

```console
root@cowboy:~# kubectl delete deployments.apps -n harbor --all
deployment.apps "harbor-chartmuseum" deleted
deployment.apps "harbor-core" deleted
deployment.apps "harbor-jobservice" deleted
deployment.apps "harbor-nginx" deleted
deployment.apps "harbor-notary-server" deleted
deployment.apps "harbor-notary-signer" deleted
deployment.apps "harbor-portal" deleted
deployment.apps "harbor-registry" deleted
root@cowboy:~# kubectl delete statefulsets.apps -n harbor --all
statefulset.apps "harbor-postgresql" deleted
statefulset.apps "harbor-redis-master" deleted
statefulset.apps "harbor-trivy" deleted
root@cowboy:~# kubectl get pvc -n harbor
NAME                     STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS      AGE
data-harbor-postgresql   Bound    pvc-48ffeb85-4dba-4c3c-bc46-545dc49c87dd   2Gi        RWO            rook-ceph-block   75d
data-harbor-trivy-0      Bound    pvc-7e17a7ec-c9fb-4095-9144-cc1e3ca5ceb2   5Gi        RWO            rook-ceph-block   75d
harbor-chartmuseum       Bound    pvc-9405407e-9783-4494-984f-66f1ca7cd593   5Gi        RWO            rook-ceph-block   75d
harbor-jobservice        Bound    pvc-ae39ff46-c2d2-45b8-89d0-07d9061e86ff   1Gi        RWO            rook-ceph-block   75d
harbor-registry          Bound    pvc-d6c5694c-9723-43f6-b0f7-522bde19823c   100Gi      RWO            rook-ceph-block   75d
root@cowboy:~# kubectl get pods -n harbor
No resources found in harbor namespace.
root@cowboy:~#
```

Then I generated a template pod manifest with `kubectl run -n harbor datamigrator --image=alpine -o yaml --dry-run=client > /tmp/datamigrator.yaml`. Here's what it looked like initially:

```yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: datamigrator
  name: datamigrator
spec:
  containers:
  - image: alpine
    name: datamigrator
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}
```

Then I edited `/tmp/datamigrator.yaml` to mount both PVCs, and changed the command and arguments so the container sleeps for an hour. Now it looks like this:

```console
root@cowboy:~# cat /tmp/datamigrator.yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: datamigrator
  name: datamigrator
spec:
  volumes:
  - name: old
    persistentVolumeClaim:
      claimName: data-harbor-postgresql
  - name: new
    persistentVolumeClaim:
      claimName: data-harbor-postgresql-0
  containers:
  - image: alpine
    name: datamigrator
    resources: {}
    command: [ "/bin/sleep" ]
    args: [ "1h" ]
    volumeMounts:
    - name: old
      mountPath: /old
    - name: new
      mountPath: /new
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}
```

I deployed the pod with `kubectl apply -f /tmp/datamigrator.yaml -n harbor`, then exec'd into the freshly created pod with `kubectl exec -n harbor datamigrator -it -- /bin/ash`. The transcript below uses the older form without `--`, which kubectl reports as deprecated.
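Hand-editing the dry-run output works, but the same pod can also be generated and started in one go. The sketch below is an alternative to what I actually ran, not a copy of it. `migrator_manifest` is a hypothetical shell function that prints the two-volume manifest for any pair of claim names. It sets `restartPolicy: Never` (the original used `Always`), so the helper pod simply finishes after its hour instead of restarting.

```shell
#!/bin/sh
# Hypothetical helper: print a Pod manifest that mounts an old and a new PVC
# at /old and /new, with a container that just sleeps for an hour.
# Arguments: old claim name, new claim name.
migrator_manifest() {
  old_claim=$1
  new_claim=$2
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: datamigrator
  labels:
    run: datamigrator
spec:
  restartPolicy: Never
  volumes:
  - name: old
    persistentVolumeClaim:
      claimName: ${old_claim}
  - name: new
    persistentVolumeClaim:
      claimName: ${new_claim}
  containers:
  - name: datamigrator
    image: alpine
    command: ["/bin/sleep", "1h"]
    volumeMounts:
    - name: old
      mountPath: /old
    - name: new
      mountPath: /new
EOF
}

# Usage (requires cluster access):
#   migrator_manifest data-harbor-postgresql data-harbor-postgresql-0 | kubectl apply -n harbor -f -
#   kubectl wait -n harbor --for=condition=Ready pod/datamigrator --timeout=2m
#   kubectl exec -n harbor -it datamigrator -- /bin/ash
```

The `kubectl wait` step avoids exec'ing into the pod before its volumes are attached and the container is running.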

Finally, I wiped out the contents of `/new` and moved all the data from `/old` to `/new` (in hindsight, copying would have been safer):

```console
root@cowboy:~# kubectl exec -n harbor datamigrator -it /bin/ash
kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl kubectl exec [POD] -- [COMMAND] instead.
/ # df
Filesystem           1K-blocks      Used Available Use% Mounted on
overlay               51474912  38422156  10414932  79% /
tmpfs                    65536         0     65536   0% /dev
tmpfs                131935904         0 131935904   0% /sys/fs/cgroup
/dev/rbd2              1998672    337928   1644360  17% /old
/dev/rbd1              8191416    104400   8070632   1% /new
/dev/mapper/cow0-var 933300992   5867160 927433832   1% /etc/hosts
/dev/mapper/cow0-var 933300992   5867160 927433832   1% /dev/termination-log
/dev/mapper/VG--nvme-containerd
                      51474912  38422156  10414932  79% /etc/hostname
/dev/mapper/VG--nvme-containerd
                      51474912  38422156  10414932  79% /etc/resolv.conf
shm                      65536         0     65536   0% /dev/shm
tmpfs                131935904        12 131935892   0% /run/secrets/kubernetes.io/serviceaccount
tmpfs                131935904         0 131935904   0% /proc/acpi
tmpfs                    65536         0     65536   0% /proc/kcore
tmpfs                    65536         0     65536   0% /proc/keys
tmpfs                    65536         0     65536   0% /proc/timer_list
tmpfs                    65536         0     65536   0% /proc/sched_debug
tmpfs                131935904         0 131935904   0% /proc/scsi
tmpfs                131935904         0 131935904   0% /sys/firmware
/ # ls /old
conf  data  lost+found
/ # ls /new
conf  data  lost+found
/ # mv /^C
/ # ls /new
conf  data  lost+found
/ # mv /old/* /new/
mv: can't remove '/new/conf': Is a directory
mv: can't remove '/new/data': Is a directory
mv: can't remove '/new/lost+found': Is a directory
/ # rm -rf /new/*
/ # mv /old/* /new/
/ # exit
root@cowboy:~#
```
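Following my own hindsight advice, a safer version of that last step copies instead of moving, and checks the result before anything is deleted. This is a minimal sketch meant to be pasted into the pod's `ash` shell. `copy_volume` is a hypothetical helper name.

Two details matter here. Copying `"$src/."` rather than `$src/*` also picks up hidden files, which the `*` glob skips. And `cp -a` preserves ownership and permissions, which matters because PostgreSQL refuses to start if its data directory has the wrong ownership or permissions.

```shell
#!/bin/sh
# Hypothetical helper: replace the contents of $2 with a copy of $1, then
# verify that both trees have identical file contents. $1 is left untouched.
copy_volume() {
  src=$1
  dst=$2
  # Empty the destination, including hidden entries that "$dst"/* alone
  # would miss; globs that match nothing are passed literally and ignored by -f.
  rm -rf -- "$dst"/* "$dst"/.[!.]* "$dst"/..?* || return 1
  # Copying "$src/." includes hidden files; -a preserves ownership (when run
  # as root), permissions and timestamps.
  cp -a "$src/." "$dst/" || return 1
  # diff -r fails if any file differs or exists on only one side.
  # It compares contents only, not ownership or permissions.
  diff -r "$src" "$dst" >/dev/null
}

# Usage, inside the datamigrator pod:
#   copy_volume /old /new && echo "copy verified"
```

Because `/old` is left intact, the original claim still holds a full copy until the new one has been proven with the real workload.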

Once I'd finished with the datamigrator pod, I deleted it and re-deployed my Helm chart. The PostgreSQL data was picked up from the new PVC, and I deleted the old (now empty) PVC.
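Before deleting an old claim like this, it may be worth checking what will happen to the volume behind it. If the bound PV's reclaim policy is `Delete` (the default for StorageClasses that don't set one), removing the PVC also removes the backing storage, here a Ceph RBD image. With `Retain`, the PV stays behind as `Released` and has to be cleaned up by hand. A minimal sketch, with `old_pv_reclaim_policy` as a hypothetical helper:

```shell
#!/bin/sh
# Hypothetical helper: print the reclaim policy of the PV bound to a PVC.
# Arguments: claim name, namespace.
old_pv_reclaim_policy() {
  claim=$1
  ns=$2
  # Look up which PV the claim is bound to.
  pv=$(kubectl get pvc "$claim" -n "$ns" -o jsonpath='{.spec.volumeName}') || return 1
  [ -n "$pv" ] || return 1
  # PVs are cluster-scoped, so no namespace flag here.
  kubectl get pv "$pv" -o jsonpath='{.spec.persistentVolumeReclaimPolicy}'
}

# Usage (requires cluster access):
#   old_pv_reclaim_policy data-harbor-postgresql harbor
#   kubectl delete pvc data-harbor-postgresql -n harbor
```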